What is RAG?

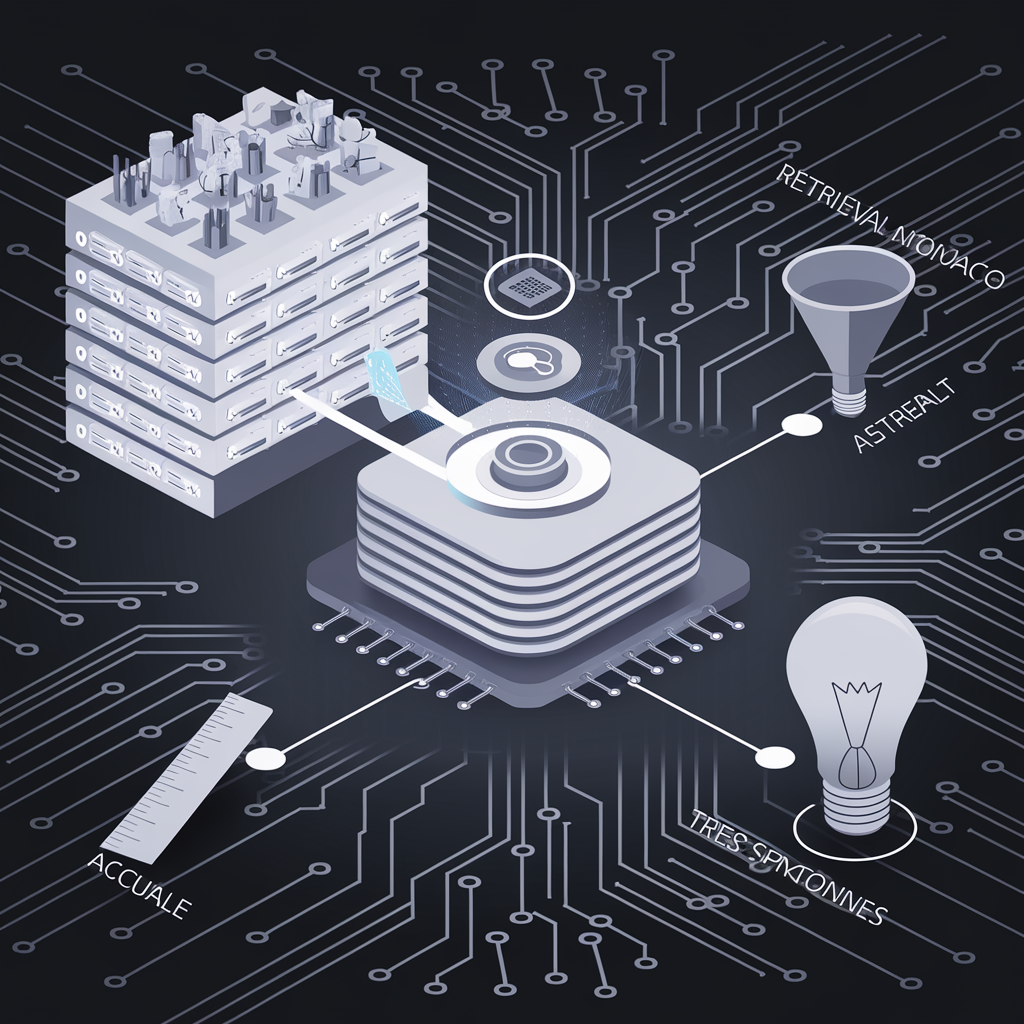

RAG stands for Retrieval Augmented Generation. It’s an advanced technique that combines information retrieval with language model generation to improve the accuracy, relevance, and factual grounding of AI-generated text.

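RAG represents a significant advancement in AI text generation. Instead of relying only on what a large language model learned during training, a RAG system first retrieves relevant passages from an external knowledge source and then passes them to the model as context for its answer. This combination of generation and precise retrieval allows for more reliable, informative, and contextually appropriate AI-generated responses.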

To RAG or not to RAG?

When RAG is useful:

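- Handling Evolving Information: RAG is particularly useful when dealing with information that changes rapidly. Because the knowledge source is queried at generation time, it can be updated without retraining the model, which makes RAG ideal for applications that need to stay current with evolving facts or events.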

- Reducing Hallucinations: When accuracy is crucial and you want to minimize the risk of the model generating plausible but incorrect information, RAG can be very beneficial, because it grounds the answer in retrieved source text.

- Enhancing Accuracy and Relevancy: RAG can enhance the accuracy, controllability, and relevancy of the LLM’s response. This is particularly useful in domains where precise and relevant information is critical.

- Integrating New Knowledge: RAG is also useful for integrating new knowledge. If your application needs to incorporate fresh information without retraining the entire model, RAG is an excellent choice.

When RAG might not be necessary:

- Static Knowledge Domains: In fields where information doesn’t change rapidly, the benefits of RAG might be less pronounced. The model’s built-in knowledge might be sufficient.

- Simple, General Queries: For basic questions or tasks that don’t require specific, up-to-date information, a standard LLM without RAG might perform adequately.

- Creative or Generative Tasks: In scenarios where the goal is to generate creative content (like stories or poetry) rather than factual information, RAG might not provide significant benefits.

ExpressifAI: Bridging Flexibility and Precision in AI-Assisted Research

ExpressifAI offers two distinct modules that cater to different user needs: Freestyle and Akademia. Let’s explore how these modules utilize Retrieval Augmented Generation (RAG) to enhance user experience and research capabilities.

Freestyle module: adaptable conversation with optional RAG

The Freestyle module is designed to be versatile, functioning as both a standard chatbot and a RAG-enhanced assistant. Here’s how it works:

- Standard Chatbot Mode: By default, Freestyle operates like a typical AI chatbot, drawing upon its built-in knowledge to answer user queries. This mode is perfect for general conversations or when specific document references aren’t necessary.

- Naive RAG Implementation: When users upload documents to the chat context, Freestyle switches to a Naive RAG approach. This means: a) The system indexes the uploaded documents. b) When a user asks a question, Freestyle searches these documents for relevant information. c) It then combines this retrieved information with the user’s query. d) Finally, it generates a response based on both its internal knowledge and the document content.
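The four Naive RAG steps above, plus the switch from standard chatbot mode, can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not ExpressifAI's implementation: it uses bag-of-words vectors with cosine similarity in place of a real embedding model, splits documents into fixed-size word chunks, and stops at building the prompt, because the actual LLM call in step (d) depends on the model provider. The names `NaiveIndex` and `freestyle_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric runs; a toy stand-in for an embedding model.
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text: str, size: int = 50) -> list[str]:
    # Split a document into chunks of at most `size` words (assumed chunking scheme).
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class NaiveIndex:
    """Hypothetical in-memory index over uploaded documents."""

    def __init__(self) -> None:
        self.chunks: list[str] = []
        self.vectors: list[Counter] = []

    def add_document(self, text: str) -> None:
        # (a) Index: chunk the document and store one vector per chunk.
        for c in chunk(text):
            self.chunks.append(c)
            self.vectors.append(Counter(tokenize(c)))

    def search(self, query: str, k: int = 2) -> list[str]:
        # (b) Search: return the k chunks most similar to the query.
        q = Counter(tokenize(query))
        order = sorted(range(len(self.chunks)),
                       key=lambda i: cosine(q, self.vectors[i]), reverse=True)
        return [self.chunks[i] for i in order[:k]]


def freestyle_prompt(index: NaiveIndex, query: str, k: int = 2) -> str:
    # Standard chatbot mode: nothing uploaded, so the query goes to the model as-is.
    if not index.chunks:
        return query
    # (c) Combine: prepend the retrieved chunks to the user's query.
    context = "\n".join(f"- {c}" for c in index.search(query, k))
    # (d) Generate: this prompt would then be sent to the LLM (call not shown).
    return ("Answer using the context below together with your own knowledge.\n"
            f"Context:\n{context}\nQuestion: {query}")


if __name__ == "__main__":
    index = NaiveIndex()
    print(freestyle_prompt(index, "What is RAG?"))  # no documents yet: plain query
    index.add_document("The Freestyle module indexes uploaded PDFs before answering.")
    index.add_document("Akademia decomposes complex research questions into sub-questions.")
    print(freestyle_prompt(index, "How does Akademia handle complex questions?", k=1))
```

Keeping the index separate from prompt construction mirrors the mode switch described above: with an empty index the function degrades to plain chat, and adding a document changes the prompt without touching the model.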

Akademia module: advanced RAG for in-depth research

The Akademia module is designed with academic research in mind and employs a more refined RAG approach. Its advanced techniques include three key components:

- Query decomposition: When a user presents a complex question, Akademia breaks it down into smaller, more manageable sub-questions. This allows for a more thorough exploration of each aspect of the query.

- Reranking: After retrieving relevant information from the document set, Akademia does not simply use the first results it finds. Instead, it re-examines the retrieved information, ranking it by how well it matches each specific sub-question. This helps ensure that the most relevant information is prioritized in the response.

- Recursive answering: Akademia does not stop at a single round of retrieval and answering. Instead, it uses the initial answers to generate follow-up questions that dive deeper into the topic. This recursive process continues until a comprehensive answer is formulated.
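The three Akademia components can be illustrated with a toy pipeline. This is a hypothetical sketch, not Akademia's actual code, and every function name in it is illustrative: `decompose` splits on "and" where a real system would more likely prompt an LLM; `rerank` uses Jaccard similarity as a stand-in for a learned reranker such as a cross-encoder; and the follow-up question in `answer` is simply the new terms found in the best passage, standing in for an LLM-generated follow-up. The sketch gathers evidence passages per sub-question rather than writing the final prose answer.

```python
import re
from typing import Optional

# Assumed stopword list; a toy stand-in for a real tokenizer or embedding model.
STOPWORDS = {"a", "an", "and", "are", "do", "does", "how", "in", "is", "it", "of", "the", "to", "what"}


def terms(text: str) -> set[str]:
    # Content words of a text, lowercased.
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}


def decompose(question: str) -> list[str]:
    # Query decomposition (hypothetical heuristic): split a compound question on "and".
    parts = re.split(r"\band\b", question.rstrip("?"))
    return [p.strip() + "?" for p in parts if p.strip()]


def retrieve(query: str, corpus: list[str], n: int = 5) -> list[str]:
    # First-stage retrieval: cheap keyword overlap, keeping up to n candidates.
    q = terms(query)
    scored = sorted(((len(q & terms(doc)), doc) for doc in corpus), key=lambda s: -s[0])
    return [doc for score, doc in scored if score > 0][:n]


def rerank(query: str, candidates: list[str]) -> list[str]:
    # Reranking: re-score candidates against this specific sub-question.
    # Jaccard similarity stands in for a learned reranker such as a cross-encoder.
    q = terms(query)

    def jaccard(doc: str) -> float:
        d = terms(doc)
        return len(q & d) / len(q | d) if q | d else 0.0

    return sorted(candidates, key=jaccard, reverse=True)


def answer(query: str, corpus: list[str], depth: int = 0, max_depth: int = 2,
           seen: Optional[set[str]] = None) -> list[str]:
    # Recursive answering: take the best unseen passage, derive a follow-up query
    # from it, and retrieve again until max_depth or no new evidence turns up.
    seen = set() if seen is None else seen
    ranked = [d for d in rerank(query, retrieve(query, corpus)) if d not in seen]
    if not ranked:
        return []
    best = ranked[0]
    seen.add(best)
    evidence = [best]
    if depth < max_depth:
        # Hypothetical follow-up: the terms the best passage adds beyond the query.
        follow_up = " ".join(sorted(terms(best) - terms(query)))
        evidence += answer(follow_up, corpus, depth + 1, max_depth, seen)
    return evidence


def research(question: str, corpus: list[str]) -> dict[str, list[str]]:
    # Decompose, then gather evidence for each sub-question independently.
    return {sub: answer(sub, corpus) for sub in decompose(question)}


if __name__ == "__main__":
    corpus = [
        "RAG retrieves documents at query time.",
        "Retrieved documents are ranked by vector similarity.",
        "Fine-tuning updates model weights.",
        "Fine-tuning requires labeled training data.",
    ]
    question = "How does RAG work and what does fine-tuning change?"
    for sub, passages in research(question, corpus).items():
        print(sub, passages)
```

Splitting retrieval into a cheap first stage and a more selective reranking stage is the usual reason for reranking: the first stage favors recall, the second precision. The `seen` set keeps the recursion from returning the same passage twice, and `max_depth` bounds how far the follow-up questions can drift.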

Akademia is particularly adept at handling complex research questions that require in-depth analysis: it provides more nuanced and comprehensive answers, explores multiple perspectives on a given topic, and offers a more thorough examination of available sources.

Conclusions

As AI becomes more central to our lives, RAG and similar techniques will continue to evolve, offering new ways to ground AI-generated answers in reliable sources and to deepen our understanding of the world. This ongoing development promises to transform how we interact with AI systems, leading to more intuitive, accurate, and adaptive intelligent assistance.